Generalizable EEG encoding models with naturalistic audiovisual stimuli

Findings summarized from Desai et al. 2021 (Journal of Neuroscience)

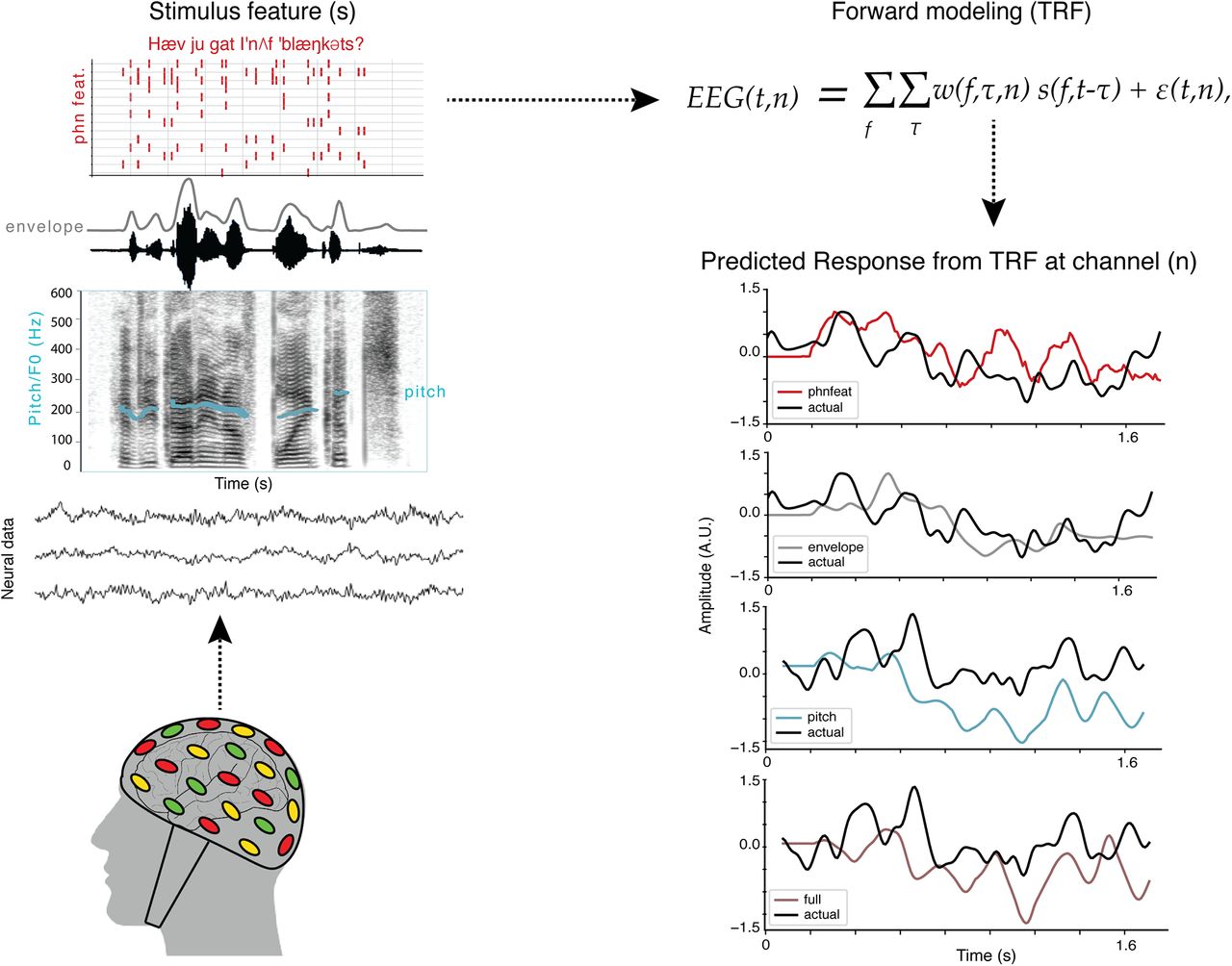

Understanding speech and language is something humans do daily, and for many it is relatively effortless. However, comprehending speech in a naturalistic, noisy environment is a complex process for the brain. To study this process, scientists combine electrophysiological measurements such as electroencephalography (EEG), a non-invasive method of recording brain activity from the scalp, with various types of stimuli. These stimuli range from simple “clicks” or “tones” to syllables or short sentences. Unfortunately, such stimuli offer limited insight into how the brain processes speech and sounds in a naturalistic environment. Additionally, listening repeatedly to a single tone, a syllable such as “ba” or “ga”, or a set of sentences is tedious and boring for an EEG participant.

We used naturalistic stimuli, specifically children’s movie trailers, to understand how the brain processes speech and audiovisual information. A primary motivation of this study was to see whether more controlled experiments, such as sentence listening, could be replaced with stimuli that are more engaging and fun to listen to. This is particularly important because some of my dissertation research involved working with children in an in-patient hospital environment, where interesting tasks mean a better experience for both researcher and participant. Our results showed that it was possible to build robust encoding models of neural responses to speech using acoustically rich, naturalistic stimuli such as movie trailers, and that these results were comparable to those from a noise-free sentence listening task [1].

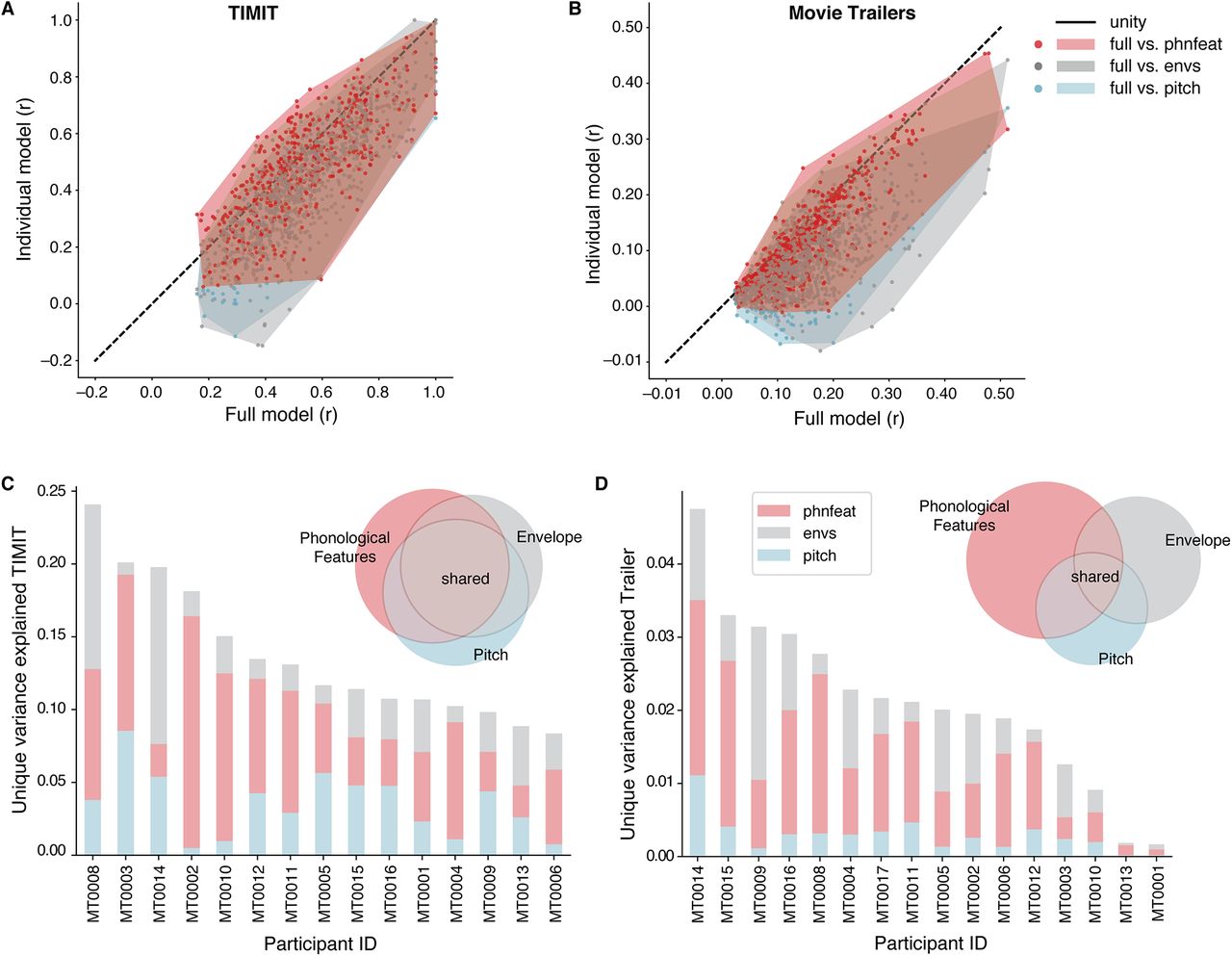

Our results showed strong tracking of acoustic and phonetic information for both the TIMIT sentences and the movie trailers. Model performance was generally better for TIMIT than for the movie trailers, likely because the trailers contain additional sound sources, such as music, overlapping speakers, and background noise, whereas the TIMIT sentences contain isolated speech. However, despite the differences in acoustic content between these contrasting stimuli, we found that it is possible to use more naturalistic audiovisual stimuli and still robustly encode neural responses to speech information.
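For readers unfamiliar with what “model performance” means for an EEG encoding model, here is a minimal sketch, assuming a linear temporal receptive field (TRF) model: time-delayed copies of stimulus features (stand-ins for, e.g., acoustic or phonetic features) are mapped to each EEG channel with ridge regression, and performance is the correlation between predicted and measured EEG on held-out data. The function names, delays, regularization strength, and the synthetic data are all hypothetical and chosen for illustration; the paper’s exact features and fitting procedure may differ.

```python
import numpy as np

def lag_features(stim, delays):
    """Stack time-delayed copies of a (time x features) stimulus matrix.

    Row t of the block for delay d holds stim[t - d], so the response at
    time t can depend on stimulus features that occurred d samples earlier.
    """
    n_t, n_f = stim.shape
    X = np.zeros((n_t, n_f * len(delays)))
    for i, d in enumerate(delays):
        shifted = np.zeros_like(stim)
        if d > 0:
            shifted[d:] = stim[:-d]
        else:
            shifted[:] = stim
        X[:, i * n_f:(i + 1) * n_f] = shifted
    return X

def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha I)^-1 X^T Y."""
    n_feat = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_feat), X.T @ Y)

def correlation_per_channel(X, Y, W):
    """Pearson correlation between predicted and measured data, per channel."""
    pred = X @ W
    return np.array([np.corrcoef(pred[:, ch], Y[:, ch])[0, 1]
                     for ch in range(Y.shape[1])])

# Synthetic demo (hypothetical data, not from the study).
rng = np.random.default_rng(0)
n_samples, n_features, n_channels = 2000, 2, 4
delays = list(range(10))  # hypothetical: 0-9 sample lags, stimulus leads response

stim = rng.standard_normal((n_samples, n_features))   # stand-in stimulus features
X = lag_features(stim, delays)
w_true = rng.standard_normal((X.shape[1], n_channels))
eeg = X @ w_true + 0.5 * rng.standard_normal((n_samples, n_channels))  # simulated EEG

train, test = slice(0, 1500), slice(1500, None)
W = fit_ridge(X[train], eeg[train], alpha=1.0)
r_test = correlation_per_channel(X[test], eeg[test], W)
print(np.round(r_test, 3))
```

In this framing, adding competing sound sources such as music or background noise would add variance to the EEG that the speech features do not explain, which would lower the held-out correlations. That is one way to read the TIMIT versus movie trailer difference described above.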

To learn more about the findings, check out the paper here: Desai et al. 2021