Dataset size considerations

Findings summarized from Desai et al. 2023 (Frontiers in Human Neuroscience)

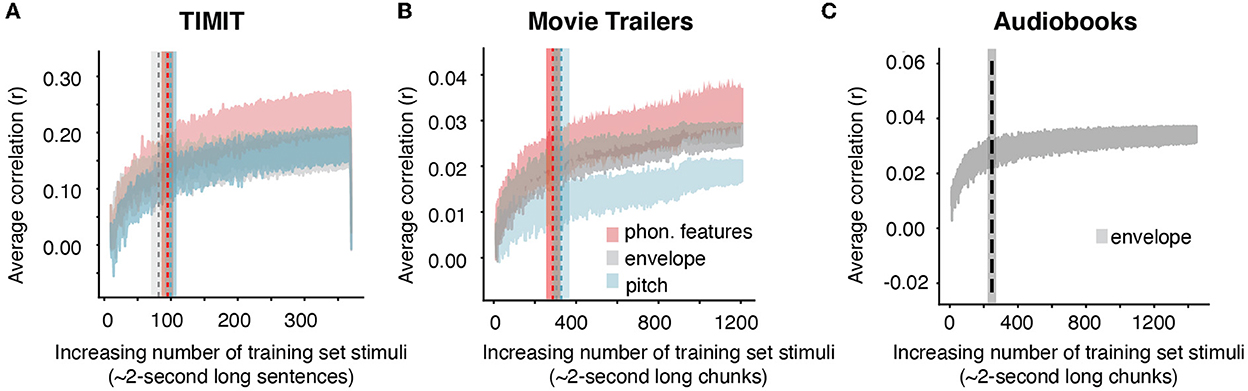

Building neuroscience experiments that are ecologically valid is important, especially when working with children or clinical populations who may not be able to tolerate long and tedious tasks. A follow-up study used the same movie trailer and sentence listening stimuli to answer a fundamental methodological question: how much data does one need to collect to trust the fidelity of the results when using naturalistic stimuli? With these populations, an experimenter may have limited time for data collection. In the 2021 JNeuro study, we investigated acoustic and phonetic selectivity measured with EEG collected over the span of one hour. We wondered, however, whether our results would still be as reliable with a shorter experimental session. My colleagues in the Hamilton lab and I have reported considerations for the amount of data needed and provide some suggestions for developing future natural speech experimental paradigms.

In this study, we used the same movie trailer and TIMIT speech sentences, in addition to an openly published EEG dataset in which Broderick et al. (2018) had participants listen to audiobooks, another form of naturalistic speech stimuli. We fit the same encoding models, chunking our training set data into 2-second segments. The data subset started with 10 random sentences for TIMIT, or 10 2-s chunks of movie trailer or audiobook stimuli. This chunk size was chosen because it is similar to the average TIMIT sentence length. After fitting the models on a subset of data, we gradually increased the size of the training set (by one random TIMIT sentence, one random 2-s chunk of movie trailers, or one random 2-s chunk of audiobooks at a time). For each training set size, we used a 10-fold bootstrapping procedure so that the particular 2-s chunks included in the training set were sampled randomly from the original training dataset.
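The subsampling procedure described above can be illustrated with a minimal sketch. This is not the code from the study: it assumes a single EEG channel, a feature matrix that already contains any time-delayed stimulus features, a plain closed-form ridge regression, and chunks drawn without replacement within each repetition. The function names (`fit_ridge`, `learning_curve`), the sampling rate, and the synthetic data are all hypothetical and only there to make the example runnable.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: w = (X'X + alpha*I)^-1 X'y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def learning_curve(X_train, y_train, X_test, y_test, chunk_len,
                   start=10, step=1, n_boot=10, alpha=1.0, seed=0):
    """Fit on growing random subsets of fixed-length chunks.

    Returns the training set sizes (in chunks) and a
    (n_sizes, n_boot) array of test-set correlations.
    """
    rng = np.random.default_rng(seed)
    n_chunks = X_train.shape[0] // chunk_len
    # Split the training data into consecutive fixed-length chunks
    X_chunks = X_train[:n_chunks * chunk_len].reshape(n_chunks, chunk_len, -1)
    y_chunks = y_train[:n_chunks * chunk_len].reshape(n_chunks, chunk_len)

    sizes = np.arange(start, n_chunks + 1, step)
    corrs = np.zeros((len(sizes), n_boot))
    for i, size in enumerate(sizes):
        for b in range(n_boot):
            # Assumption: chunks are drawn without replacement per repetition
            idx = rng.choice(n_chunks, size=size, replace=False)
            Xs = X_chunks[idx].reshape(size * chunk_len, -1)
            ys = y_chunks[idx].reshape(size * chunk_len)
            w = fit_ridge(Xs, ys, alpha)
            # Correlation between predicted and held-out response
            corrs[i, b] = np.corrcoef(X_test @ w, y_test)[0, 1]
    return sizes, corrs

# Synthetic demonstration (hypothetical values, not real EEG)
demo_rng = np.random.default_rng(1)
sfreq = 16                      # hypothetical sampling rate in Hz
chunk_len = 2 * sfreq           # 2-s chunks
w_true = np.array([1.0, -2.0, 0.5, 0.0, 1.5])
X_train = demo_rng.normal(size=(40 * chunk_len, 5))
y_train = X_train @ w_true + demo_rng.normal(size=X_train.shape[0])
X_test = demo_rng.normal(size=(10 * chunk_len, 5))
y_test = X_test @ w_true + demo_rng.normal(size=X_test.shape[0])

sizes, corrs = learning_curve(X_train, y_train, X_test, y_test, chunk_len)
mean_corr = corrs.mean(axis=1)  # average over the 10 repetitions per size
```

Plotting `mean_corr` against `sizes` gives a curve of model performance as a function of training set size, and the spread across repetitions gives a rough sense of how much the result depends on which chunks were drawn.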

One method of evaluating model performance is to examine the correlation coefficient. Although the correlation value from the model can give a qualitative indication of stability, it does not show whether the fitted weights themselves are similar and stable when using more or less training data. To look at how the weights stabilize as more training data are added, we plotted the receptive fields at each iteration of increasing training set size for the TIMIT and movie trailer stimuli. Examples are shown in the videos below for one subject: